Consider the Two-state Markov Chain With the Following Transition Matrix


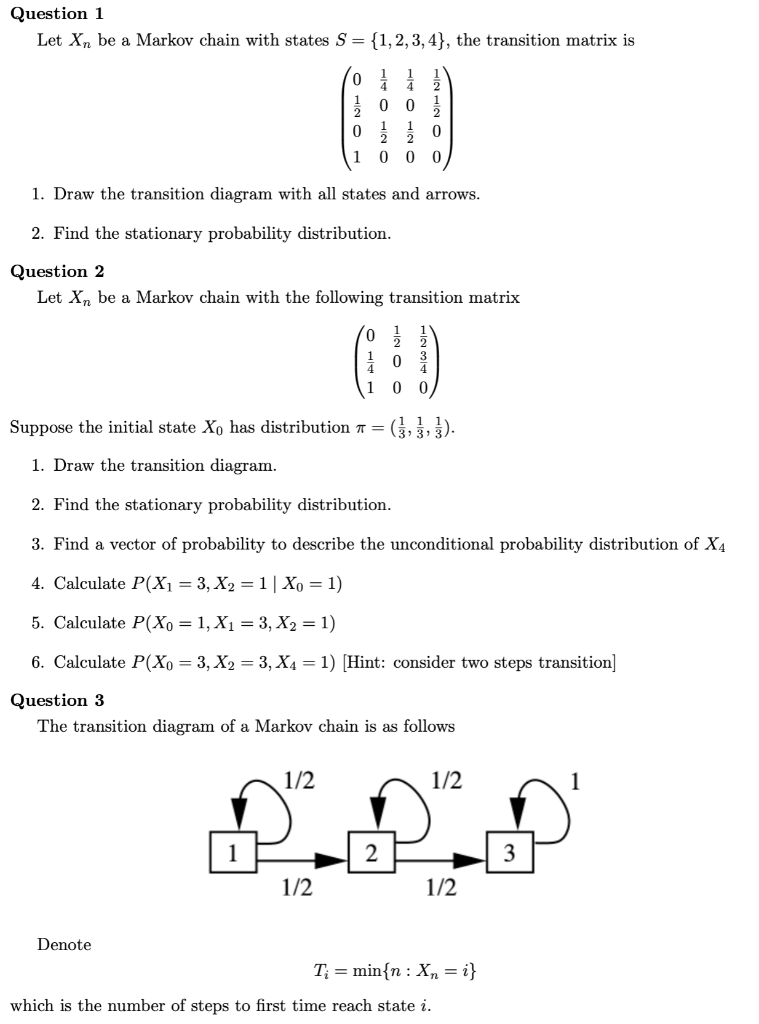

Solved Question 1 Let Xn Be A Markov Chain With States S Chegg Com

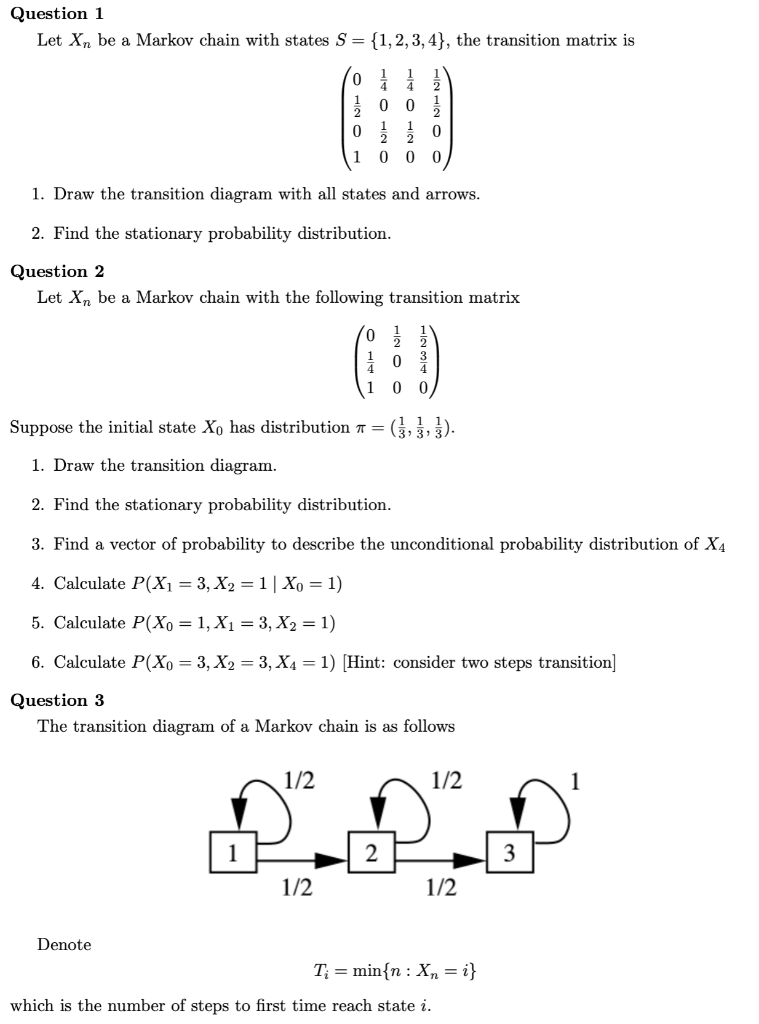


In a nutshell, the Markov property holds when the probability of transitioning to the next state depends only on the current state; the system is memoryless. A Markov chain is called ergodic if some power of the transition matrix has only non-zero entries (many texts call this property regular). The states are ordered first H, then D, then Y.
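The "some power has only non-zero entries" condition can be tested mechanically. Below is a minimal sketch in plain Python, assuming the row convention (rows sum to one); the helper names `mat_mul` and `is_regular` and both example matrices are illustrative and do not come from the source. The search stops at exponent (n - 1)^2 + 1, which is Wielandt's bound for an n x n matrix.

```python
def mat_mul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def is_regular(p):
    """Return True if some power of p has only strictly positive entries."""
    n = len(p)
    # Only the zero / non-zero pattern matters, so work with 0/1 entries.
    pattern = [[1 if x > 0 else 0 for x in row] for row in p]
    power = pattern
    # Wielandt's bound: if any power is entry-wise positive, then the power
    # with exponent (n - 1)^2 + 1 is, so exponents 1..(n - 1)^2 + 1 suffice.
    for _ in range((n - 1) ** 2 + 1):
        if all(x > 0 for row in power for x in row):
            return True
        product = mat_mul(power, pattern)
        power = [[1 if x > 0 else 0 for x in row] for row in product]
    return False


# Hypothetical examples (not taken from the source):
flip = [[0, 1], [1, 0]]          # alternates forever, no positive power
lazy = [[0.5, 0.5], [1.0, 0.0]]  # the square is already entry-wise positive

flip_is_regular = is_regular(flip)
lazy_is_regular = is_regular(lazy)
```

Working with the 0/1 pattern instead of the probabilities avoids floating-point underflow when the exponent gets large.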

Consider a Markov chain with the following transition probability matrix P. We first form a Markov chain with state space S = {H, D, Y} and the following transition probability matrix. (c) Compute the two-step transition matrix of the Markov chain.

Show that all the eigenvalues of M are bounded by 1 in absolute value and that the uniform distribution is the unique stationary probability distribution for M. Depending on the notation, one requires that either the row sums or the column sums equal one, with nonnegative entries. Consider the Markov chain with the following transition matrix.

Transition matrix: list all states of X_t as rows and all states of X_{t+1} as columns, then insert the probabilities p_ij; each row adds to 1. The transition matrix is usually given the symbol P = (p_ij). A Markov chain has two states, A and B, and the following probabilities. Is this chain irreducible?

Figure 1120 - A state transition diagram. How to use the Chapman-Kolmogorov (C-K) equations to answer the following question. Regular Markov chains and steady states.

Find the stationary distribution for this chain. The state transition matrix P has a useful property: the (i, j) entry of its k-th power P^k is the probability that the system is in state j after k transitions, starting from state i. Is the stationary distribution a limiting distribution for the chain?
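The k-step property can be checked numerically. The sketch below uses exact fractions and a hypothetical two-state matrix (its values are not from the source) to compute P^2 and to confirm the Chapman-Kolmogorov relation P^2 · P^3 = P^5. The helper names `mat_mul` and `mat_pow` are illustrative.

```python
from fractions import Fraction as F


def mat_mul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def mat_pow(p, k):
    """Return the k-th power of p (the identity matrix for k = 0)."""
    n = len(p)
    result = [[F(int(i == j)) for j in range(n)] for i in range(n)]
    for _ in range(k):
        result = mat_mul(result, p)
    return result


# Hypothetical one-step matrix; each row sums to 1.
P = [[F(9, 10), F(1, 10)],
     [F(1, 2), F(1, 2)]]

P2 = mat_pow(P, 2)  # P2[i][j] = probability of i -> j in exactly 2 steps
P3 = mat_pow(P, 3)
P5 = mat_pow(P, 5)

# Chapman-Kolmogorov: a 5-step move splits into 2 steps followed by 3.
ck_holds = mat_mul(P2, P3) == P5
```

Here `P2[0][1]` is 7/50: the chain can go 0 → 0 → 1 (9/10 · 1/10) or 0 → 1 → 1 (1/10 · 1/2), and the two paths add up to 0.14.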

Suppose n black balls and n white balls are placed in two urns so that each urn contains n balls. 50 05 05 05 0 (a) Draw the transition diagram of the Markov chain. For example, consider the following transition probability matrix for a 2-step Markov process.

A Markov chain has the transition probability matrix with rows (0.3, 0.2, 0.5), (0.5, 0.1, 0.4) and (0.5, 0.2, 0.3). Given the … Model and sufficient conditions: consider a Markov chain {X_t, t = 0, 1, 2, ...} with state space N = {1, 2, ...} and one-step transition probability matrix P = (p_ij). The following is an example of an ergodic Markov chain.

In this article we will discuss the Chapman-Kolmogorov equations and how these are used to … First, write down the one-step transition probability matrix. A Markov chain is a sequence of discrete-time transitions under the Markov property with a finite state space.

For example, it is possible to go from state 0 to state 2, since … That is, one way of getting from state 0 to state 2 is to go from state 0 to … A regular transition matrix and Markov chain: a transition matrix T is a regular transition matrix if, for some k, T^k has no zero entries. Example 49: Consider the Markov chain consisting of the three states 0, 1, 2 and having transition probability matrix … It is easy to verify that this Markov chain is irreducible.

The (i, j)-th entry of the matrix P^n gives the probability that the Markov chain, starting in state i, will be in state j after n steps. Then use your calculator to calculate the nth power of this one-step transition matrix. Give a reason for your answer.

(d) What is the state distribution π_2 for t = 2 if the initial state … P 8 0 22 7 13 3 4. Two states are said to communicate if it is possible to go from each one to the other in a finite number of steps.

For a finite state space, the transition matrix needs to satisfy only two properties for the Markov chain to converge: irreducibility and aperiodicity. (c) What is the state distribution π_2 for t = 2 if the initial state distribution for t = 0 is π_0 = (0.6, 0.3, 0.1)? Consider the Markov chain shown in Figure 1120.
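A question of the form "what is the state distribution at t = 2" comes down to two vector-matrix products, π_2 = π_0 P P. The sketch below is only an illustration: it pairs the initial distribution (0.6, 0.3, 0.1) with the 3 x 3 matrix quoted elsewhere on this page (rows 0.3 0.2 0.5, 0.5 0.1 0.4, 0.5 0.2 0.3). The source does not confirm that the two belong to the same exercise, so that pairing is an assumption, and the helper name `step` is illustrative.

```python
from fractions import Fraction as F

# Assumed pairing: this matrix and this initial distribution both appear
# on the page, but the source does not say they go together.
P = [[F(3, 10), F(2, 10), F(5, 10)],
     [F(5, 10), F(1, 10), F(4, 10)],
     [F(5, 10), F(2, 10), F(3, 10)]]
pi0 = [F(6, 10), F(3, 10), F(1, 10)]


def step(dist, p):
    """Advance a row-vector distribution by one transition: dist * p."""
    n = len(p)
    return [sum(dist[i] * p[i][j] for i in range(n)) for j in range(n)]


pi1 = step(pi0, P)  # distribution at t = 1
pi2 = step(pi1, P)  # distribution at t = 2
```

Under these assumptions π_1 = (0.38, 0.17, 0.45) and π_2 = (0.424, 0.183, 0.393); both still sum to one, as any distribution must.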

Is this chain aperiodic? At each stage, one ball is selected at random from each urn and the two balls are interchanged. So the transition matrix for the example above is …
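For the two-urn scheme (n black and n white balls, n balls per urn, one ball drawn from each urn and the two swapped), the transition matrix can be written down once a state is chosen. The sketch below assumes the state is the number of white balls in the first urn; the source does not fix this choice, and the function name `urn_transition_matrix` is illustrative.

```python
from fractions import Fraction as F


def urn_transition_matrix(n):
    """Transition matrix of the two-urn swap chain for n >= 1.

    Assumed state: i = number of white balls in the first urn (0..n).
    The first urn then holds i white and n - i black balls, and the
    second urn holds n - i white and i black balls.
    """
    size = n + 1
    p = [[F(0)] * size for _ in range(size)]
    for i in range(size):
        # i drops by one: white drawn from urn 1 and black drawn from urn 2.
        down = F(i, n) ** 2
        # i rises by one: black drawn from urn 1 and white drawn from urn 2.
        up = F(n - i, n) ** 2
        if i > 0:
            p[i][i - 1] = down
        if i < n:
            p[i][i + 1] = up
        # Otherwise the two drawn balls have the same colour and i is unchanged.
        p[i][i] = 1 - down - up
    return p


P_urn = urn_transition_matrix(2)
```

For n = 2 this gives rows (0, 1, 0), (1/4, 1/2, 1/4) and (0, 1, 0). The middle state can stay put, so the chain has a self-loop, which is relevant to the aperiodicity question.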

With transition matrix … and … (b) Compute the two-step transition matrix of the Markov chain. The first column represents the state of eating at home, the second column represents the state of eating at the Chinese restaurant, the …

The matrix describing the Markov chain is called the transition matrix. It is the most important tool for analysing Markov chains.

An irreducible Markov chain is a Markov chain with a path between any pair of states.
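The "path between any pair of states" condition is a reachability question on the transition diagram, so a breadth-first search can settle it. This is a minimal sketch, assuming the row convention; both example matrices are hypothetical and the function names are illustrative.

```python
from collections import deque


def reachable_from(p, start):
    """Return the set of states reachable from `start` (including itself)."""
    seen = {start}
    queue = deque([start])
    while queue:
        i = queue.popleft()
        for j, prob in enumerate(p[i]):
            # An edge i -> j exists exactly when p[i][j] > 0.
            if prob > 0 and j not in seen:
                seen.add(j)
                queue.append(j)
    return seen


def is_irreducible(p):
    """True if every state can reach every other state."""
    n = len(p)
    return all(len(reachable_from(p, s)) == n for s in range(n))


# Hypothetical examples (not taken from the source):
connected = [[0.5, 0.5, 0.0],
             [0.5, 0.25, 0.25],
             [0.0, 0.5, 0.5]]
absorbing = [[1.0, 0.0],   # state 0 never leaves, so it cannot reach state 1
             [0.5, 0.5]]

connected_ok = is_irreducible(connected)
absorbing_ok = is_irreducible(absorbing)
```

In `connected`, state 0 cannot reach state 2 in one step, but it can in two steps via state 1, which is all irreducibility asks for.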

If it starts at A, it stays at A with probability 1/3 and moves to B with probability 2/3. If it starts at B, it stays at B with probability 1/5 and moves to A with probability 4/5. A regular chain is defined below.
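For the two-state chain on A and B just described, the stationary distribution has a closed form: if a is the probability of moving A → B and b the probability of moving B → A, then π = (b / (a + b), a / (a + b)). The sketch below applies this with exact fractions and checks that π P = π. The function name `two_state_stationary` is illustrative.

```python
from fractions import Fraction as F

# Rows are "from" states in the order A, B; columns are "to" states.
P = [[F(1, 3), F(2, 3)],
     [F(4, 5), F(1, 5)]]


def two_state_stationary(p):
    """Stationary distribution of a two-state chain (requires a + b > 0)."""
    a = p[0][1]  # probability of moving A -> B
    b = p[1][0]  # probability of moving B -> A
    return [b / (a + b), a / (a + b)]


pi = two_state_stationary(P)

# Verify stationarity: one more step leaves the distribution unchanged.
pi_after_step = [pi[0] * P[0][j] + pi[1] * P[1][j] for j in range(2)]
```

This gives π = (6/11, 5/11). Every entry of P is positive, so the chain is regular and this stationary distribution is also the limiting distribution from any starting state.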

Suppose that M is symmetric and entry-wise positive. These bounds guarantee a rate of convergence as the truncation limit tends to infinity and enable one to determine an a priori truncation limit for any desired accuracy. In the transition matrix P …

I got the following question from someone: consider a homogeneous regular Markov chain with state space S of size |S| and transition matrix M. A Markov chain has the transition probability matrix with rows (0.3, 0.2, 0.5), (0.5, 0.1, 0.4) and (0.5, 0.2, 0.3). What is Pr(X … The state transition matrix for a Markov chain is stochastic, so an initial distribution of state probabilities is transformed into another such distribution.

What is the probability that, starting in state i, the Markov chain will be in state j after n steps? (b) Is the Markov chain ergodic?

Note that the columns and rows are ordered. Define p_ij to be the probability of the system being in state i after it was in state j at any observation. Another special property of Markov chains concerns only so-called regular Markov chains.

Markov chains: solutions. Consider the Markov chain X0, X1, X2, … Exercise 223 (Transition matrix for some physical process): Write the transition matrix of the following Markov chains. The transition matrix has rows (0, 1, 0), (1/3, 1/3, 1/3) and (0.5, 0.5, 0). (a) Draw the transition diagram of the Markov chain.

0 05 05 0.

Solved Assume That X 0 X 1 Is A Two State Markov Chain On Chegg Com

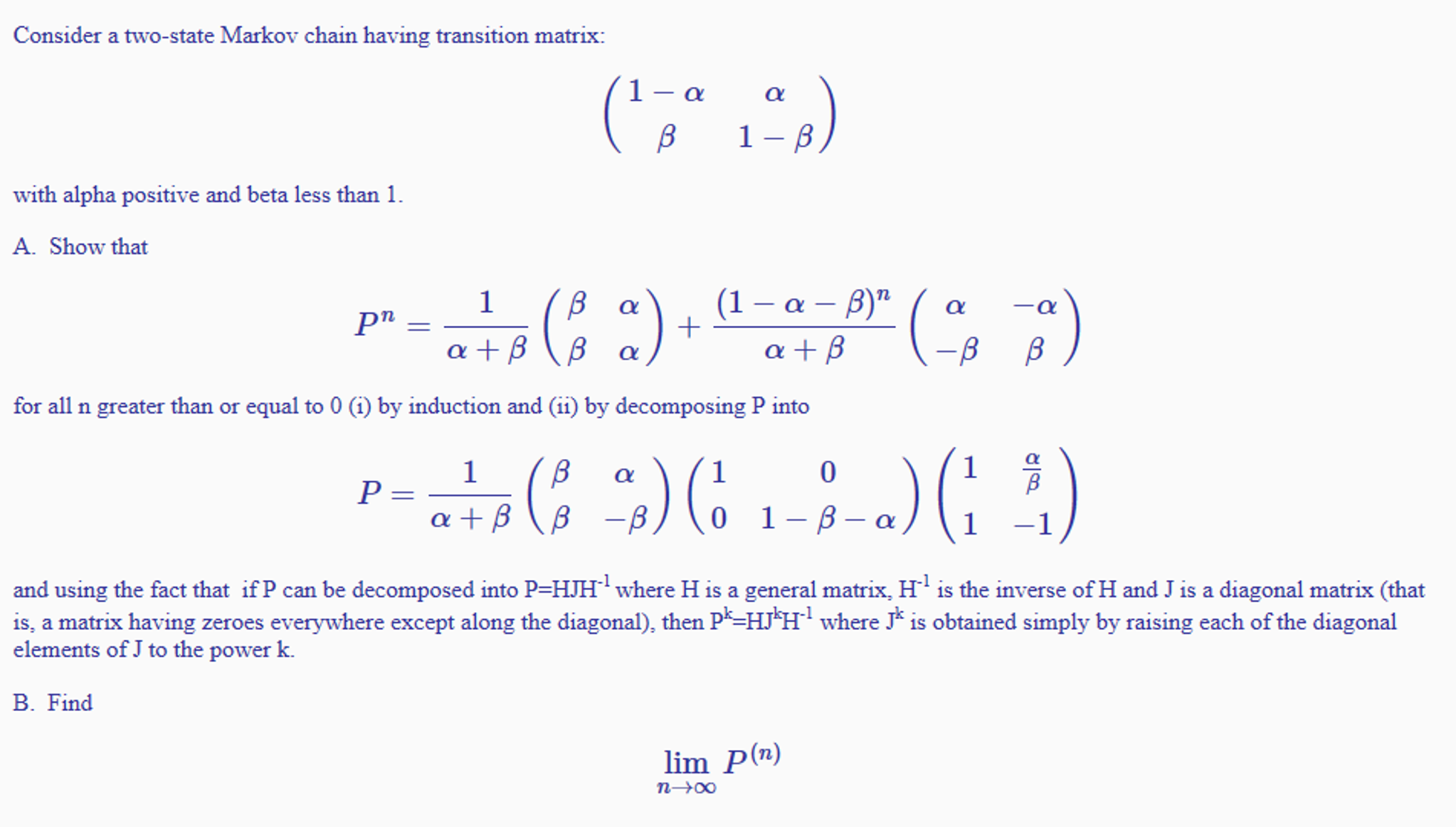

Solved Consider A Two State Markov Chain Having Transition Chegg Com

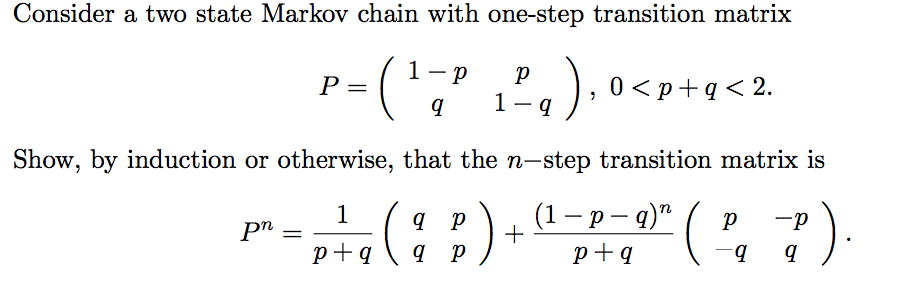

Solved Consider A Two State Markov Chain With One Step Chegg Com
